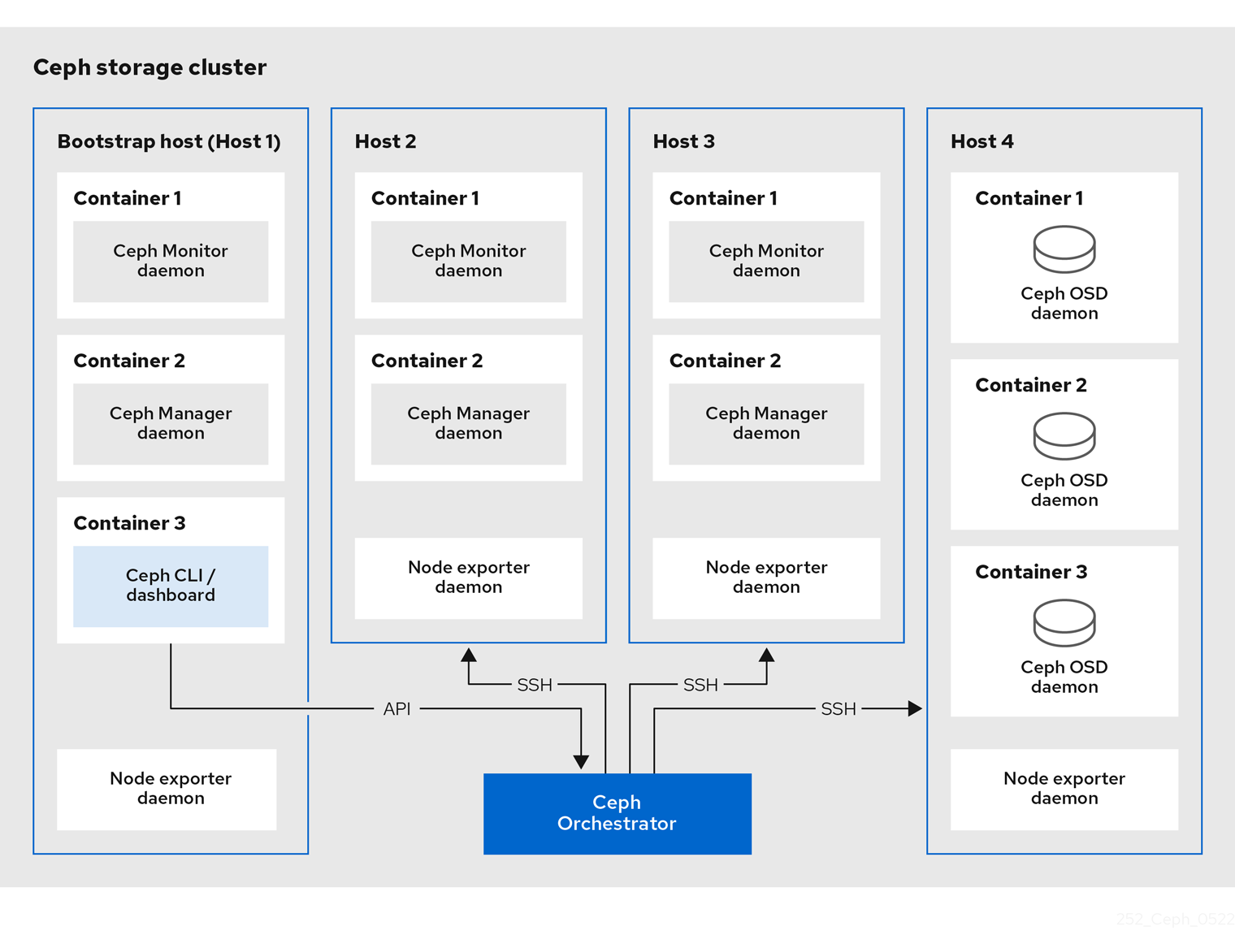

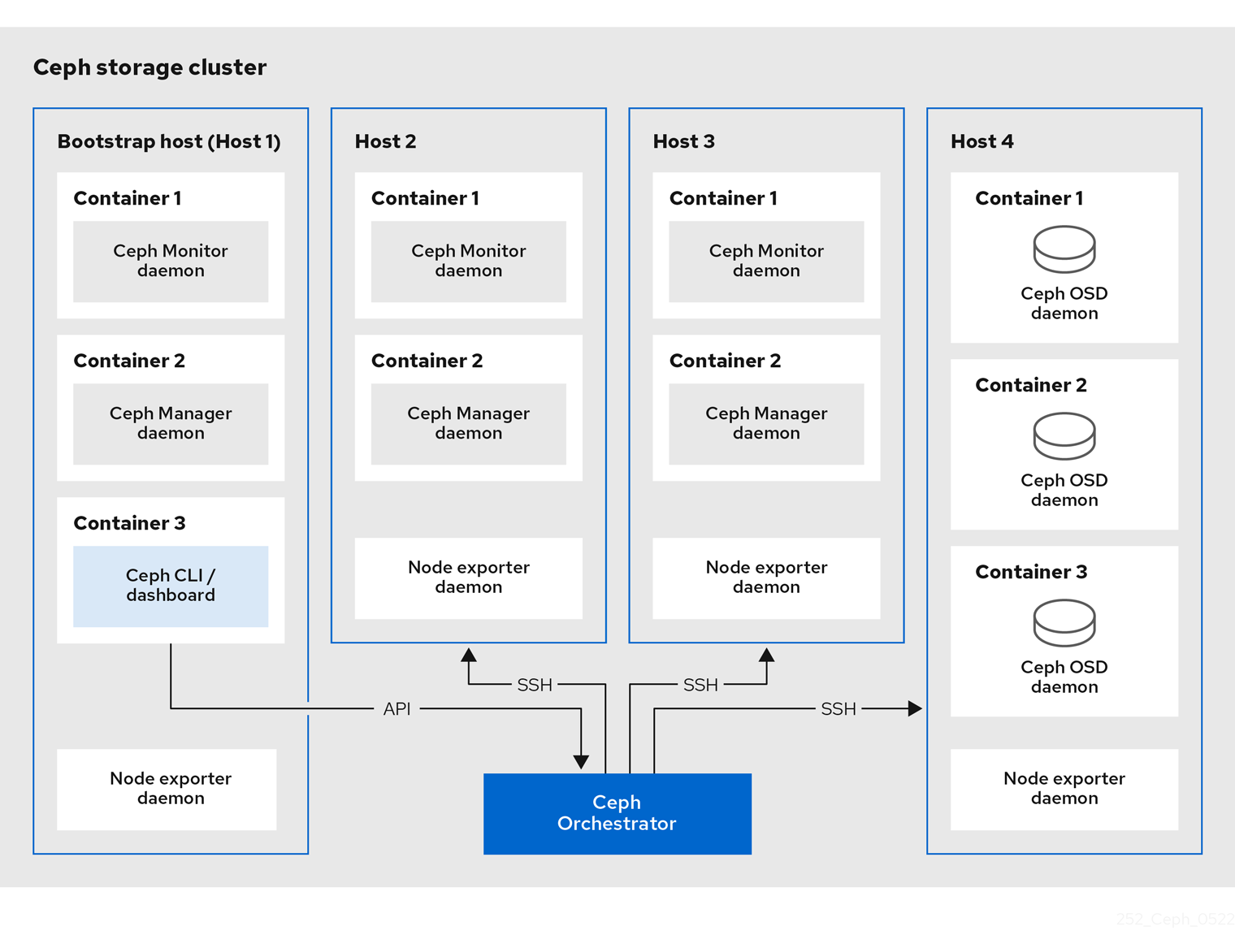

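#Cephadm uses SSH to communicate with the nodes in the storage cluster

Ceph Storage containers can be added, removed, or updated without any external tools

An SSH key pair is generated during bootstrap, or you can supply your own SSH key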

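#The Cephadm bootstrapping process

Creates a small storage cluster on a single node

The cluster contains one MON and one MGR, plus any required dependencies (the monitoring stack components)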

#After the cluster is initialized, grow it by scaling out

Cluster nodes can be added with the Ceph command line or the Ceph Dashboard
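Scaling out from the command line can be sketched as follows, assuming the cluster has already been bootstrapped. It follows the two steps from the cephadm host-management documentation: authorize the cluster key on the new host with `ssh-copy-id`, then register the host with `ceph orch host add`. The host name `node2`, the IP `192.168.0.102`, the function name and the `DRY_RUN` switch are hypothetical; with the default `DRY_RUN=1` the function only prints the commands.

```shell
#!/usr/bin/env bash
# Sketch: add one host to a cephadm-managed cluster from the bootstrap node.
# DRY_RUN=1 (default) prints the commands instead of running them.
# Host names and IPs passed in are placeholders for your own nodes.

add_ceph_host() {
  local host="$1" ip="$2"
  local run=()
  if [ "${DRY_RUN:-1}" = "1" ]; then
    run=(echo)
  fi
  # Authorize the cluster public key (written by bootstrap) for root on the new host
  "${run[@]}" ssh-copy-id -f -i /etc/ceph/ceph.pub "root@${host}"
  # Hand the host over to the cephadm orchestrator
  "${run[@]}" ceph orch host add "${host}" "${ip}"
}
```

On the bootstrap node, `DRY_RUN=0 add_ceph_host node2 192.168.0.102` would run both commands; `ceph orch host ls` then lists the registered hosts.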

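Cephadm only supports Octopus (v15) and later releases

Cephadm requires container support (in the form of Podman or Docker)

Cephadm uses Podman as its default container engine

Cephadm requires Python 3 (Python 3.6 or later)

Cephadm requires systemd

Cephadm requires time synchronization (for example chrony or NTP)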
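The requirements above can be checked by hand with a short read-only script like the following. It only illustrates what each requirement means and is not a replacement for `cephadm check-host`; the function names are hypothetical, and it treats an active `chronyd` as the time-sync check because that is the unit the bootstrap output in this note verifies (cephadm also recognizes other time services such as ntpd).

```shell
#!/usr/bin/env bash
# Read-only sketch of the cephadm prerequisites listed above.
# It only reports; it installs and changes nothing.

# Succeeds if a "MAJOR.MINOR[.PATCH]" version string is 3.6 or later
python_version_ok() {
  local major minor
  IFS=. read -r major minor _ <<< "$1"
  [ "$major" -gt 3 ] || { [ "$major" -eq 3 ] && [ "$minor" -ge 6 ]; }
}

check_prereqs() {
  local rc=0 v
  if command -v python3 >/dev/null 2>&1; then
    v="$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')"
    if python_version_ok "$v"; then echo "python3 $v: OK"; else echo "python3 $v: too old"; rc=1; fi
  else
    echo "python3: missing"; rc=1
  fi
  if command -v podman >/dev/null 2>&1 || command -v docker >/dev/null 2>&1; then
    echo "container engine: OK"
  else
    echo "container engine: missing (podman or docker)"; rc=1
  fi
  if command -v systemctl >/dev/null 2>&1; then echo "systemd: OK"; else echo "systemd: missing"; rc=1; fi
  # chronyd is the time-sync unit checked in the bootstrap output of this note
  if systemctl is-active --quiet chronyd 2>/dev/null; then
    echo "time sync (chronyd): OK"
  else
    echo "time sync (chronyd): not active"; rc=1
  fi
  return "$rc"
}
```

Run `check_prereqs` on each node; a non-zero exit status means at least one line reported a problem.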

#Install on all nodes

#Remove Podman (this setup uses Docker instead)

dnf remove -y podman

#Install Docker (using the linuxmirrors.cn installer script)

bash <(curl -sSL https://linuxmirrors.cn/docker.sh)

#Configure a Docker registry mirror

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://dockerhub.swireb.cn"]

}

EOF

#Reload systemd and restart Docker

systemctl daemon-reload && systemctl restart docker
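Because a malformed `daemon.json` stops Docker from starting, one option is to validate the file before restarting the service. The sketch below writes the same single-mirror configuration through a temporary file and checks it with Python's `json.tool` module; the function name `write_daemon_json` is hypothetical, and it replaces any existing `daemon.json` rather than merging with it.

```shell
#!/usr/bin/env bash
# Sketch: write a single-mirror daemon.json and refuse to install it if it is not valid JSON.
# Overwrites the target file; it does not merge with existing settings.

write_daemon_json() {
  local path="$1" mirror="$2" tmp
  tmp="$(mktemp)" || return 1
  printf '{\n  "registry-mirrors": ["%s"]\n}\n' "$mirror" > "$tmp"
  # json.tool exits non-zero on a parse error
  if ! python3 -m json.tool "$tmp" >/dev/null; then
    rm -f "$tmp"
    return 1
  fi
  chmod 0644 "$tmp"
  mv "$tmp" "$path"
}
```

On a node this would be `write_daemon_json /etc/docker/daemon.json https://dockerhub.swireb.cn && systemctl daemon-reload && systemctl restart docker`. Note that Docker's `registry-mirrors` setting only applies to Docker Hub images; the Ceph image in the bootstrap output of this note is pulled from `quay.io`, which the mirror does not cover.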

#Install Cephadm on the bootstrap node only

#Option 1: download the cephadm binary directly (recommended)

CEPH_RELEASE=18.2.4

curl --silent --remote-name --location https://download.ceph.com/rpm-${CEPH_RELEASE}/el9/noarch/cephadm

chmod +x cephadm

mv cephadm /usr/sbin
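The download step can be made a little more defensive: `--fail` keeps curl from saving an HTTP error page as `cephadm`, and `install -m 0755` combines the `chmod` and `mv`. This is a sketch; the function names are hypothetical and the `el9` default simply mirrors the URL used above (other EL versions are assumed to follow the same path layout).

```shell
#!/usr/bin/env bash
# Sketch: build the cephadm download URL and install the binary with explicit permissions.

# Prints the URL for a release; the second argument defaults to el9 as in this note
cephadm_url() {
  local release="$1" el="${2:-el9}"
  printf 'https://download.ceph.com/rpm-%s/%s/noarch/cephadm\n' "$release" "$el"
}

install_cephadm() {
  local release="$1" dest="${2:-/usr/sbin/cephadm}" tmp
  tmp="$(mktemp)" || return 1
  # --fail: exit non-zero on HTTP errors instead of saving the error page
  if ! curl --silent --show-error --fail --location -o "$tmp" "$(cephadm_url "$release")"; then
    rm -f "$tmp"
    return 1
  fi
  # Copy into place with mode 0755, then remove the temporary file
  install -m 0755 "$tmp" "$dest" && rm -f "$tmp"
}
```

Usage: `install_cephadm 18.2.4 && cephadm version`.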

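#Option 2: install Cephadm from the official YUM repository (not recommended, because it installs podman by default)

dnf search release-ceph #search for the Ceph release packages available on this system

dnf install -y centos-release-ceph-reef #install the YUM repository for the chosen release

dnf install -y cephadm #install Cephadm

dnf remove -y podman #remove podman (it is installed as a dependency by default)

#Install the Ceph command-line tools

dnf search release-ceph #search for the Ceph release packages available on this system

dnf install -y centos-release-ceph-reef #install the YUM repository for the chosen release

dnf install -y epel-release #some dependencies of the command-line tools come from the EPEL repository

dnf install -y ceph-common #install the ceph command-line tools with dnf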

#You can run Cephadm with a specific Python interpreter (rarely needed, only when Cephadm cannot use the system Python)

python3.9 /usr/sbin/cephadm version

#Confirm the versions

cephadm version

ceph --version
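To confirm that the cephadm binary and the `ceph` CLI from `ceph-common` are on the same release, the numeric version can be pulled out of each command's output and compared. The helper names are hypothetical, and since the exact wording of `cephadm version` output can differ between releases, the parser only looks for `version X.Y.Z`.

```shell
#!/usr/bin/env bash
# Sketch: compare release numbers reported by `cephadm version` and `ceph --version`.

# Prints the first "X.Y.Z" that follows the word "version", e.g. 18.2.4
ceph_release() {
  sed -n 's/.*version \([0-9][0-9.]*\).*/\1/p' <<< "$1" | head -n 1
}

# Succeeds when both strings contain the same, non-empty release number
versions_match() {
  local a b
  a="$(ceph_release "$1")"
  b="$(ceph_release "$2")"
  [ -n "$a" ] && [ "$a" = "$b" ]
}
```

Usage: `versions_match "$(cephadm version 2>&1)" "$(ceph --version)" && echo "same release"`.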

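#Check whether the node meets the requirements (--expect-hostname also verifies that the local hostname matches HOSTNAME)

cephadm check-host [--expect-hostname HOSTNAME]

#Prepare the node for Cephadm (installs missing dependencies; with --expect-hostname it also sets the hostname to HOSTNAME)

cephadm prepare-host [--expect-hostname HOSTNAME]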

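#Overview of the bootstrap process

Create MON and MGR daemons for the new cluster on the local host

Generate a new SSH key for the Ceph cluster and add it to the root user's /root/.ssh/authorized_keys

Write the public key to /etc/ceph/ceph.pub

Write a minimal configuration file to /etc/ceph/ceph.conf

Write the client.admin privileged (administrative) keyring to /etc/ceph/ceph.client.admin.keyring

Add the _admin label to the bootstrap host

Any host carrying this label also receives a copy of /etc/ceph/ceph.conf and of /etc/ceph/ceph.client.admin.keyring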
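After bootstrap, the files listed above can be checked with a small script such as this one (run as root, since it reads root's `authorized_keys`). The directory and `authorized_keys` path are parameters only so the check can be tried on scratch files; the defaults are the paths named in this note, and the function name is hypothetical. Hosts carrying the `_admin` label can be listed with `ceph orch host ls`.

```shell
#!/usr/bin/env bash
# Sketch: confirm the files bootstrap writes and that ceph.pub is authorized for root.

verify_bootstrap_files() {
  local dir="${1:-/etc/ceph}" auth="${2:-/root/.ssh/authorized_keys}" f missing=0
  for f in ceph.conf ceph.pub ceph.client.admin.keyring; do
    if [ ! -s "$dir/$f" ]; then
      echo "missing or empty: $dir/$f"
      missing=1
    fi
  done
  # -x -F: the public key must appear as an exact, whole line in authorized_keys
  if [ -s "$dir/ceph.pub" ] && ! grep -qxF -f "$dir/ceph.pub" "$auth" 2>/dev/null; then
    echo "ceph.pub not found in $auth"
    missing=1
  fi
  return "$missing"
}
```

Usage: `verify_bootstrap_files && echo "bootstrap files present"`.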

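#This SSH key is generated automatically when the cluster is bootstrapped and needs no extra configuration

#Bootstrap syntax (when supplying your own SSH key pair)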

cephadm --docker bootstrap --mon-ip <ip-addr> --ssh-private-key <private-key-filepath> --ssh-public-key <public-key-filepath>
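If you prefer your own key pair over the generated one, it can be created with `ssh-keygen` before bootstrapping. The sketch below generates an RSA key without a passphrase (cephadm has to use it non-interactively) and prints the matching bootstrap command rather than running it; the key path, comment string and function name are hypothetical. The public key still has to be authorized for root on every host that joins the cluster.

```shell
#!/usr/bin/env bash
# Sketch: create a dedicated key pair and print the bootstrap command that uses it.
# Nothing is bootstrapped here; the command is only printed.

make_bootstrap_key() {
  local key="$1" mon_ip="$2"
  # -N '': empty passphrase; -q: no progress output
  ssh-keygen -q -t rsa -b 4096 -N '' -C 'ceph-bootstrap' -f "$key" || return 1
  printf 'cephadm --docker bootstrap --mon-ip %s --ssh-private-key %s --ssh-public-key %s\n' \
    "$mon_ip" "$key" "$key.pub"
}
```

Usage: `make_bootstrap_key /root/ceph_key 192.168.0.101`, then review and run the printed command.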

#Run on node1

cephadm bootstrap --mon-ip 192.168.0.101 --cluster-network 172.26.0.0/24

Verifying podman|docker is present...

Verifying lvm2 is present...

Verifying time synchronization is in place...

Unit chronyd.service is enabled and running

Repeating the final host check...

docker (/usr/bin/docker) is present

systemctl is present

lvcreate is present

Unit chronyd.service is enabled and running

Host looks OK

Cluster fsid: ec79e2f8-4ca3-11ef-a144-000c295daf9b

Verifying IP 192.168.0.101 port 3300 ...

Verifying IP 192.168.0.101 port 6789 ...

Mon IP `192.168.0.101` is in CIDR network `192.168.0.0/24`

Mon IP `192.168.0.101` is in CIDR network `192.168.0.0/24`

Pulling container image quay.io/ceph/ceph:v18...

Ceph version: ceph version 18.2.4 (e7ad5345525c7aa95470c26863873b581076945d) reef (stable)

Extracting ceph user uid/gid from container image...

Creating initial keys...

Creating initial monmap...

Creating mon...

Waiting for mon to start...

Waiting for mon...

mon is available

Assimilating anything we can from ceph.conf...

Generating new minimal ceph.conf...

Restarting the monitor...

Setting public_network to 192.168.0.0/24 in mon config section

Setting cluster_network to 172.26.0.0/24

Wrote config to /etc/ceph/ceph.conf

Wrote keyring to /etc/ceph/ceph.client.admin.keyring

Creating mgr...

Verifying port 0.0.0.0:9283 ...

Verifying port 0.0.0.0:8765 ...

Verifying port 0.0.0.0:8443 ...

Waiting for mgr to start...

Waiting for mgr...

mgr not available, waiting (1/15)...

mgr not available, waiting (2/15)...

mgr not available, waiting (3/15)...

mgr not available, waiting (4/15)...

mgr is available

Enabling cephadm module...

Waiting for the mgr to restart...

Waiting for mgr epoch 5...

mgr epoch 5 is available

Setting orchestrator backend to cephadm...

Generating ssh key...

Wrote public SSH key to /etc/ceph/ceph.pub

Adding key to root@localhost authorized_keys...

Adding host node1...

Deploying mon service with default placement...

Deploying mgr service with default placement...

Deploying crash service with default placement...

Deploying ceph-exporter service with default placement...

Deploying prometheus service with default placement...

Deploying grafana service with default placement...

Deploying node-exporter service with default placement...

Deploying alertmanager service with default placement...

Enabling the dashboard module...

Waiting for the mgr to restart...

Waiting for mgr epoch 9...

mgr epoch 9 is available

Generating a dashboard self-signed certificate...

Creating initial admin user...

Fetching dashboard port number...

Ceph Dashboard is now available at:

URL: https://node1:8443/

User: admin

Password: l3ym2yyy2n

Enabling client.admin keyring and conf on hosts with "admin" label

Saving cluster configuration to /var/lib/ceph/ec79e2f8-4ca3-11ef-a144-000c295daf9b/config directory

Enabling autotune for osd_memory_target

You can access the Ceph CLI as following in case of multi-cluster or non-default config:

sudo /usr/sbin/cephadm shell --fsid ec79e2f8-4ca3-11ef-a144-000c295daf9b -c /etc/ceph/ceph.conf -k /etc/ceph/ceph.client.admin.keyring

Or, if you are only running a single cluster on this host:

sudo /usr/sbin/cephadm shell

Please consider enabling telemetry to help improve Ceph:

ceph telemetry on

For more information see:

https://docs.ceph.com/en/latest/mgr/telemetry/

Bootstrap complete.
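The fsid and the initial dashboard login appear only in this output, so it can be worth saving it, for example by piping the bootstrap command through `tee bootstrap.log`, and reading the values back later. The helper below is a hypothetical sketch keyed on the labels in the output above (`Cluster fsid:`, `URL:`, `User:`, `Password:`); other releases may word these lines differently.

```shell
#!/usr/bin/env bash
# Sketch: read fsid / dashboard URL / user / password back from a saved bootstrap log,
# e.g. one captured with:
#   cephadm bootstrap --mon-ip 192.168.0.101 --cluster-network 172.26.0.0/24 2>&1 | tee bootstrap.log

bootstrap_field() {
  local log="$1" label
  case "$2" in
    fsid) label='Cluster fsid' ;;
    url) label='URL' ;;
    user) label='User' ;;
    password) label='Password' ;;
    *) return 1 ;;
  esac
  # Lines may be indented with tabs/spaces; print the value after "Label: "
  sed -n "s/^[[:space:]]*${label}: //p" "$log" | head -n 1
}
```

The fsid can then be passed to `cephadm shell --fsid`, as in the multi-cluster command shown in the output. Since the log holds the initial admin password, keep it readable by root only.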

#Related dashboard URLs

https://node1:8443 #Dashboard

https://node1:3000 #Grafana

http://node1:9095 #Prometheus
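A quick reachability check for these three endpoints can be done with curl. This is a sketch with a hypothetical function name: `-k` accepts the self-signed dashboard certificate, `node1` has to resolve from wherever it runs, and a status of `000` means no HTTP response at all. Which hosts actually run Grafana and Prometheus, and on which ports, is reported by `ceph orch ps`.

```shell
#!/usr/bin/env bash
# Sketch: print the HTTP status code returned by each URL (000 = no response).

probe_endpoints() {
  local url code
  for url in "$@"; do
    # -k: accept self-signed certs; -m 5: give up after 5 seconds
    code="$(curl -k -s -o /dev/null -m 5 -w '%{http_code}' "$url")" || code="000"
    echo "$url $code"
  done
}
```

Usage: `probe_endpoints https://node1:8443 https://node1:3000 http://node1:9095`.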